About Yandex Vision OCR

OCR stands for optical character recognition. Yandex Vision OCR is a computer vision service that recognizes text in images and PDF files.

Vision OCR provides its features through an API. You can integrate Vision OCR into an app written in any programming language or send requests using cURL.
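To illustrate calling the API from code rather than cURL, here is a minimal Python sketch that only builds the HTTP request and does not send it. The endpoint URL, the `Authorization` and `x-folder-id` headers, and the `language_codes` field name are assumptions modeled on the Vision OCR REST API and are not confirmed by this page; `mime_type` and `model` are the fields this page names. Check the API reference before relying on any of them.

```python
import base64
import json
import urllib.request

# Assumption: URL of the synchronous recognition method; verify in the API reference.
OCR_ENDPOINT = "https://ocr.api.cloud.yandex.net/ocr/v1/recognizeText"


def build_ocr_request(
    image: bytes,
    iam_token: str,
    folder_id: str,
    mime_type: str = "image",  # this page gives "image" as the default value
    model: str = "page",  # default recognition model
    languages: tuple = ("ru", "en"),
) -> urllib.request.Request:
    """Build, but do not send, a POST request asking Vision OCR to recognize `image`."""
    body = {
        "mime_type": mime_type,
        "language_codes": list(languages),  # assumed field name for the language list
        "model": model,
        # Binary image data travels as base64 text inside the JSON body.
        "content": base64.b64encode(image).decode("ascii"),
    }
    return urllib.request.Request(
        OCR_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {iam_token}",  # assumed auth scheme (IAM token)
            "x-folder-id": folder_id,  # assumed header carrying the cloud folder ID
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_ocr_request(b"<image bytes>", "<IAM token>", "<folder ID>")
    print(request.get_method(), request.full_url)
    # Sending it would be: urllib.request.urlopen(request).read()
```

Keeping request construction separate from sending makes it easy to inspect the body and headers before any network call.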

Vision OCR operating modes

Vision OCR can process recognition requests both synchronously and asynchronously.

- In synchronous mode, Vision OCR processes your request as soon as it receives it and returns the result in the response. This mode suits apps that interact with the user in real time. However, you cannot use synchronous mode to process large volumes of data.

- In asynchronous mode, Vision OCR accepts your request and immediately returns an operation ID, which you then use to get the recognition result. Recognition takes longer in this mode, but a single request can contain a large volume of data. Use asynchronous mode if you do not need an immediate response.
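The asynchronous flow (send a request, get an operation ID, fetch the result later) usually ends in a polling loop. A minimal sketch: `get_operation` is a hypothetical callable you would implement against the real operation-status method, and the `"done"` key is an assumed shape of the status object.

```python
import time
from typing import Any, Callable, Dict


def wait_for_operation(
    operation_id: str,
    get_operation: Callable[[str], Dict[str, Any]],
    poll_interval: float = 1.0,
    timeout: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, Any]:
    """Poll an asynchronous operation until it reports completion or the timeout expires.

    `get_operation` is a hypothetical callable that fetches the operation status by ID;
    the "done" key is an assumption about the shape of that status object.
    """
    deadline = time.monotonic() + timeout
    while True:
        operation = get_operation(operation_id)
        if operation.get("done"):
            return operation  # the recognition result can now be requested or read
        if time.monotonic() >= deadline:
            raise TimeoutError(f"operation {operation_id} not done after {timeout} s")
        sleep(poll_interval)
```

Passing `get_operation` and `sleep` in as parameters keeps the loop testable without network access; a production client might also lengthen the interval between polls.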

Recognition models

Vision OCR provides several models for recognizing different types of text in images and PDF files. In particular, there are models for regular text, multi-column text, tables, handwritten text, and common documents, such as passports, as well as license plates. The better the model matches your content, the better the recognition result. To specify a model, use the model field in your request.

See below for the list of available recognition models:

- page (default): Suitable for images with any number of text lines within a single column.
- page-column-sort: Use it to recognize multi-column text.
- handwritten: Use it to recognize a combination of typed and handwritten text in English or Russian.
- table: Use it to recognize tables in English or Russian.

Models for recognizing common documents:

- passport: Passport data page.
- driver-license-front: Driver license, front side.
- driver-license-back: Driver license, back side.
- vehicle-registration-front: Vehicle registration certificate, front side.
- vehicle-registration-back: Vehicle registration certificate, back side.
- license-plates: All license plate numbers in the image.
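Because the model field accepts only the names listed above, a client can validate it before sending a request. A minimal sketch; the set names and the `choose_model` helper are hypothetical, and the English/Russian restriction mirrors the descriptions of handwritten and table in this section.

```python
from typing import Iterable, Optional

DEFAULT_MODEL = "page"
TEXT_MODELS = {"page", "page-column-sort", "handwritten", "table"}
DOCUMENT_MODELS = {
    "passport",
    "driver-license-front",
    "driver-license-back",
    "vehicle-registration-front",
    "vehicle-registration-back",
    "license-plates",
}
# Per the model list, these models recognize English or Russian text only.
EN_RU_ONLY_MODELS = {"handwritten", "table"}


def choose_model(requested: Optional[str] = None, languages: Iterable[str] = ()) -> str:
    """Return a value for the request's `model` field, or raise ValueError if it is invalid."""
    model = DEFAULT_MODEL if requested is None else requested
    if model not in TEXT_MODELS | DOCUMENT_MODELS:
        raise ValueError(f"unknown recognition model: {model!r}")
    unsupported = set(languages) - {"en", "ru"}
    if model in EN_RU_ONLY_MODELS and unsupported:
        raise ValueError(f"{model} supports only English or Russian, got {sorted(unsupported)}")
    return model
```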

Language model detection

For text recognition, Vision OCR uses language models trained on specific languages. Vision OCR automatically selects a suitable model based on the list of languages you provide in your request.

Each recognition uses only one language model. For example, if an image contains text in both Chinese and Japanese, only one of these languages will be recognized. To recognize the other one, send another request that specifies that language.

Tip

If your text is in Russian and English, the English-Russian model works best. To use this model, specify one or both of these languages in text_detection_config and do not specify any other languages.
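Since each request uses a single language model, text in several languages needs several requests, except that Russian and English can share the English-Russian model. A minimal sketch of that planning step; `plan_requests` is hypothetical and assumes two-letter language codes such as en, ru, zh, and ja.

```python
from typing import Iterable, List

# English and Russian share the English-Russian model (per the tip in this section).
SHARED_MODEL_LANGUAGES = ("en", "ru")


def plan_requests(languages: Iterable[str]) -> List[List[str]]:
    """Group the languages present in an image into one language list per request."""
    shared: List[str] = []
    separate: List[List[str]] = []
    for code in dict.fromkeys(languages):  # drop duplicates, keep first-seen order
        if code in SHARED_MODEL_LANGUAGES:
            shared.append(code)
        else:
            separate.append([code])  # every other language needs its own request
    return ([shared] if shared else []) + separate
```

For an image with Chinese and Japanese text, `plan_requests(["zh", "ja"])` yields two single-language requests, matching the example in this section.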

Image requirements

An image in a request must meet the following requirements:

- The supported file formats are JPEG, PNG, and PDF. Specify the MIME type in the mime_type property. The default value is image.
- The maximum file size is 20 MB.
- The image size should not exceed 20 MP (height × width).
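These limits can be checked on the client before uploading. A minimal sketch using only the standard library: it identifies the format by its file signature and reads PNG dimensions from the IHDR header. Reading "20 MB" as 20 × 1024 × 1024 bytes is an assumption, and for JPEG or PDF the caller must pass the dimensions in, since parsing those formats is out of scope here.

```python
import struct
from typing import List, Optional, Tuple

MAX_FILE_BYTES = 20 * 1024 * 1024  # assumption: "20 MB" read as 20 MiB
MAX_PIXELS = 20_000_000  # 20 MP, height x width

# Leading bytes ("magic numbers") that identify each supported format.
SIGNATURES = {
    b"\xff\xd8\xff": "JPEG",
    b"\x89PNG\r\n\x1a\n": "PNG",
    b"%PDF-": "PDF",
}


def detect_format(data: bytes) -> Optional[str]:
    for magic, name in SIGNATURES.items():
        if data.startswith(magic):
            return name
    return None


def png_size(data: bytes) -> Tuple[int, int]:
    # The IHDR chunk follows the 8-byte signature; width and height are
    # big-endian 32-bit integers at byte offsets 16 and 20.
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def check_image(data: bytes, width: Optional[int] = None,
                height: Optional[int] = None) -> List[str]:
    """Return a list of requirement violations; an empty list means the checks passed."""
    problems = []
    fmt = detect_format(data)
    if fmt is None:
        problems.append("unsupported format: expected JPEG, PNG, or PDF")
    if len(data) > MAX_FILE_BYTES:
        problems.append(f"file is {len(data)} bytes, limit is {MAX_FILE_BYTES}")
    if fmt == "PNG" and width is None and len(data) >= 24:
        width, height = png_size(data)
    if width is not None and height is not None and width * height > MAX_PIXELS:
        problems.append(f"{width}x{height} = {width * height} px exceeds 20 MP")
    return problems
```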

Response with recognition results

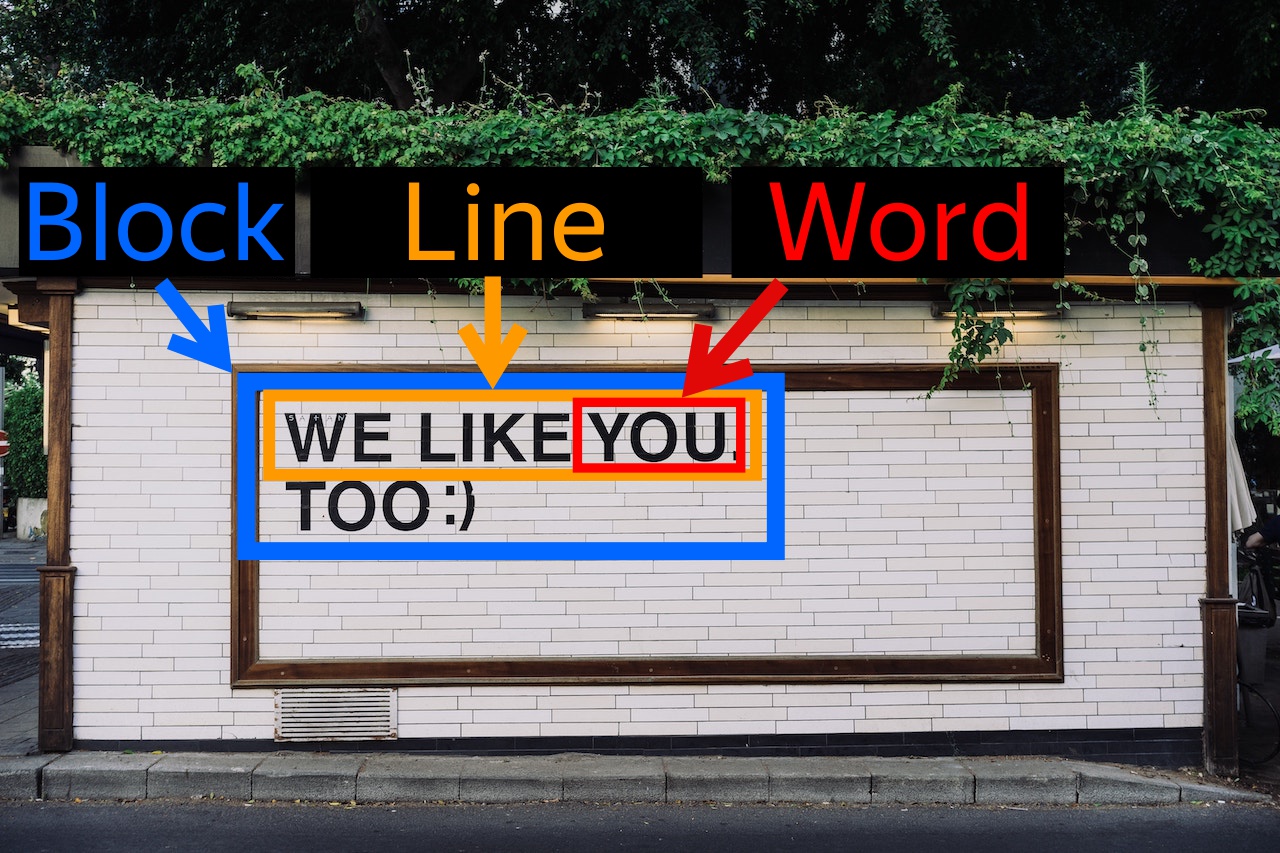

The service detects text in the image and groups it by level: words are grouped into lines, lines into blocks, and blocks into pages.

As a result, Yandex Vision OCR returns an object with the following properties:

- pages[]: Page size.
- blocks[]: Position of the text on the page.
- lines[]: Position and recognition accuracy.
- words[]: Position, accuracy, text, and language used for recognition.

To show the position of the text, Yandex Vision OCR returns the coordinates of the rectangle that frames the text. Coordinates are the number of pixels from the top-left corner of the image.

The rectangle's vertices are listed counterclockwise, starting from the top-left corner:

```
1 ← 4
↓   ↑
2 → 3
```
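Client code often converts the four vertices into a left/top/right/bottom box, for example to draw a frame over the image. A minimal sketch; `to_box` is hypothetical. Vertex values are strings in this section's response example, hence the `int()` conversion, and treating a missing coordinate as 0 is a defensive assumption.

```python
from typing import Dict, List, Tuple


def to_box(vertices: List[Dict[str, str]]) -> Tuple[int, int, int, int]:
    """Convert the four vertices of a bounding box into (left, top, right, bottom).

    Vertices arrive in the order 1 top-left, 2 bottom-left, 3 bottom-right,
    4 top-right; min/max makes the result independent of that order.
    """
    xs = [int(v.get("x", "0")) for v in vertices]
    ys = [int(v.get("y", "0")) for v in vertices]
    return min(xs), min(ys), max(xs), max(ys)


if __name__ == "__main__":
    # Vertices of the first block in this section's response example.
    block = [{"x": "460", "y": "777"}, {"x": "460", "y": "906"},
             {"x": "810", "y": "906"}, {"x": "810", "y": "777"}]
    left, top, right, bottom = to_box(block)
    print(left, top, right - left, bottom - top)  # prints: 460 777 350 129
```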

Here is an example of a recognized image with coordinates:

```json
{
  "result": {
    "text_annotation": {
      "width": "1920",
      "height": "1280",
      "blocks": [{
        "bounding_box": {
          "vertices": [
            {"x": "460", "y": "777"},
            {"x": "460", "y": "906"},
            {"x": "810", "y": "906"},
            {"x": "810", "y": "777"}
          ]
        },
        "lines": [{
          "bounding_box": {
            "vertices": [
              {"x": "460", "y": "777"},
              {"x": "460", "y": "820"},
              {"x": "802", "y": "820"},
              {"x": "802", "y": "777"}
            ]
          },
          "alternatives": [{
            "text": "PENGUINS",
            "words": [{
              "bounding_box": {
                "vertices": [
                  {"x": "460", "y": "768"},
                  {"x": "460", "y": "830"},
                  {"x": "802", "y": "830"},
                  {"x": "802", "y": "768"}
                ]
              },
              "text": "PENGUINS",
              "entity_index": "-1"
            }]
          }]
        }, {
          "bounding_box": {
            "vertices": [
              {"x": "489", "y": "861"},
              {"x": "489", "y": "906"},
              {"x": "810", "y": "906"},
              {"x": "810", "y": "861"}
            ]
          },
          "alternatives": [{
            "text": "CROSSING",
            "words": [{
              "bounding_box": {
                "vertices": [
                  {"x": "489", "y": "852"},
                  {"x": "489", "y": "916"},
                  {"x": "810", "y": "916"},
                  {"x": "810", "y": "852"}
                ]
              },
              "text": "CROSSING",
              "entity_index": "-1"
            }]
          }]
        }],
        "languages": [{
          "language_code": "en"
        }]
      }, {
        "bounding_box": {
          "vertices": [
            {"x": "547", "y": "989"},
            {"x": "547", "y": "1046"},
            {"x": "748", "y": "1046"},
            {"x": "748", "y": "989"}
          ]
        },
        "lines": [{
          "bounding_box": {
            "vertices": [
              {"x": "547", "y": "989"},
              {"x": "547", "y": "1046"},
              {"x": "748", "y": "1046"},
              {"x": "748", "y": "989"}
            ]
          },
          "alternatives": [{
            "text": "SLOW",
            "words": [{
              "bounding_box": {
                "vertices": [
                  {"x": "547", "y": "983"},
                  {"x": "547", "y": "1054"},
                  {"x": "748", "y": "1054"},
                  {"x": "748", "y": "983"}
                ]
              },
              "text": "SLOW",
              "entity_index": "-1"
            }]
          }]
        }],
        "languages": [{
          "language_code": "en"
        }]
      }],
      "entities": []
    },
    "page": "0"
  }
}
```
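To turn this nested structure into plain text, walk it block by block and line by line. A minimal sketch that follows the field names in the example; `iter_lines` and `full_text` are hypothetical helpers, and because every line in the example has exactly one entry in `alternatives`, the sketch simply takes the first one.

```python
from typing import Any, Dict, Iterator, List, Tuple


def iter_lines(response: Dict[str, Any]) -> Iterator[Tuple[int, str, List[str]]]:
    """Yield (block index, line text, word texts) for each recognized line."""
    annotation = response["result"]["text_annotation"]
    for block_index, block in enumerate(annotation.get("blocks", [])):
        for line in block.get("lines", []):
            alternatives = line.get("alternatives", [])
            if not alternatives:
                continue  # nothing recognized for this line
            best = alternatives[0]
            words = [word["text"] for word in best.get("words", [])]
            yield block_index, best["text"], words


def full_text(response: Dict[str, Any]) -> str:
    """Join lines with newlines and separate blocks with a blank line."""
    blocks: Dict[int, List[str]] = {}
    for block_index, text, _words in iter_lines(response):
        blocks.setdefault(block_index, []).append(text)
    return "\n\n".join("\n".join(lines) for lines in blocks.values())
```

Applied to the example, `full_text` returns `"PENGUINS\nCROSSING\n\nSLOW"`: two lines from the first block and one from the second.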

Response format

Yandex Vision OCR returns recognition results in JSON Lines format, in which each line is a separate JSON object.
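Because JSON Lines puts one complete JSON object on each line, a response can be parsed line by line; an object like the pretty-printed example would occupy a single line on the wire. A minimal sketch; `parse_json_lines` is hypothetical, and the `result`/`page` shape used in the usage snippet is taken from the example for illustration only.

```python
import json
from typing import Any, Dict, Iterable, Iterator


def parse_json_lines(lines: Iterable[str]) -> Iterator[Dict[str, Any]]:
    """Parse JSON Lines input: one complete JSON object per non-empty line."""
    for number, raw in enumerate(lines, start=1):
        raw = raw.strip()
        if not raw:
            continue  # tolerate blank lines such as a trailing newline
        try:
            yield json.loads(raw)
        except json.JSONDecodeError as exc:
            raise ValueError(f"line {number} is not valid JSON: {exc.msg}") from exc


if __name__ == "__main__":
    payload = '{"result": {"page": "0"}}\n{"result": {"page": "1"}}\n'
    for obj in parse_json_lines(payload.splitlines()):
        print(obj["result"]["page"])
```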

Errors in determining coordinates

In some cases, the coordinates returned by the service may not match the text as displayed in your image viewer. This happens when the viewer handles EXIF metadata incorrectly.

During recognition, Yandex Vision OCR takes into account the image rotation specified by the Orientation attribute in the EXIF section. Some image viewers ignore this rotation value, so the displayed image does not match the returned results.

To fix this error, do one of the following:

- Change your image viewer settings so that it applies the rotation angle specified in the EXIF section when displaying images.
- Remove the Orientation attribute from the image's EXIF section or set it to 0 before sending the image to Yandex Vision OCR.
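One way to see why the mismatch happens is to map a point from the upright image, which Vision OCR analyzes after applying the Orientation rotation, back to the raw stored pixels that a viewer ignoring EXIF displays. A minimal sketch, assuming the returned coordinates refer to the upright image as this section implies; `upright_to_raw` is hypothetical and handles only the rotation-only Orientation values 1, 3, 6, and 8 (the mirrored values 2, 4, 5, and 7 are left out).

```python
from typing import Tuple


def upright_to_raw(x: float, y: float, orientation: int,
                   raw_width: int, raw_height: int) -> Tuple[float, float]:
    """Map a point in the upright (EXIF-rotated) image to raw stored-pixel coordinates.

    Orientation 1: no rotation; 3: rotated 180 degrees; 6: displayed after a
    90-degree clockwise rotation; 8: displayed after a 90-degree counterclockwise one.
    """
    if orientation == 1:
        return x, y
    if orientation == 3:
        return raw_width - x, raw_height - y
    if orientation == 6:
        # Upright = raw rotated 90 degrees clockwise: upright (x, y) is raw (y, H - x).
        return y, raw_height - x
    if orientation == 8:
        # Upright = raw rotated 90 degrees counterclockwise: upright (x, y) is raw (W - y, x).
        return raw_width - y, x
    raise ValueError(f"orientation {orientation} (mirrored or unknown) is not handled")
```

Mapping all four vertices of a bounding box and taking their min/max gives a frame that lines up with the unrotated image in such a viewer.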

Recognition accuracy

Recognition accuracy (confidence) is Yandex Vision OCR's estimate of how accurate the result is. For example, "confidence": 0.9412244558 for the "we like you" line means that the text was recognized correctly with a probability of about 94%.

Currently, recognition accuracy is calculated only for lines. The confidence value also appears for words and languages, but it is copied from the line's value.
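Since confidence is meaningful only per line, a client that wants to flag unreliable results should filter at line level. A minimal sketch, assuming each line has already been flattened into a dict with `text` and `confidence` keys (a hypothetical shape, not the raw response layout); the 0.9 threshold is an arbitrary illustration.

```python
from typing import Dict, Iterable, List, Tuple, Union


def as_percent(confidence: float) -> str:
    """Format a confidence value such as 0.9412244558 as a rounded percentage."""
    return format(confidence, ".0%")


def split_by_confidence(
    lines: Iterable[Dict[str, Union[str, float]]], threshold: float = 0.9
) -> Tuple[List[str], List[str]]:
    """Separate line texts into (accepted, needs_review) by line-level confidence.

    Word-level values are not consulted because they are copied from the line.
    """
    accepted: List[str] = []
    needs_review: List[str] = []
    for line in lines:
        target = accepted if float(line["confidence"]) >= threshold else needs_review
        target.append(str(line["text"]))
    return accepted, needs_review
```

For instance, `as_percent(0.9412244558)` gives `"94%"`, the figure quoted above.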